Free Speech Obligations of Social Media

by Kasey Mathews | published Feb. 2nd, 2021

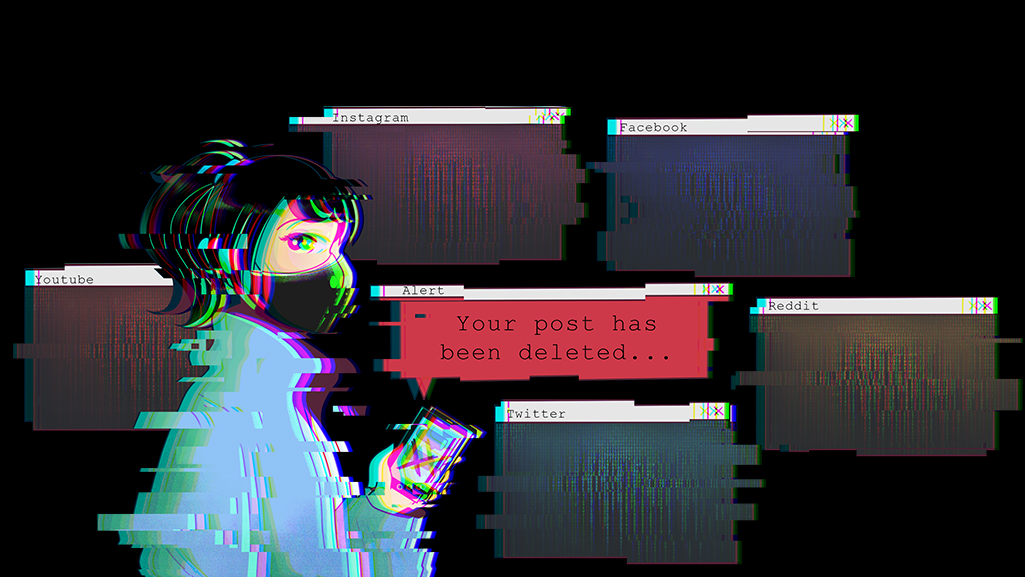

Recently, many social media companies have come under fire for failing to censor hate speech. Because social media platforms are the forum in which many ideas and discussions take place, many feel these companies have an obligation to moderate the types of content being posted and made available to the public.

So what are social media companies doing to combat hate speech and falsehoods? What will the evolution of this content moderation look like? And who decides what is and isn’t appropriate to post, anyway?

Protecting Our Speech

Hate speech sits in a gray area at the very edge of our protected freedoms. To see why, we must first understand what qualifies as protected versus unprotected speech under the First Amendment to the U.S. Constitution.

As Bobby Colon, legal counsel for RIT, put it, “if you look at the United States Constitution and you try to find a definition ... for protected speech and unprotected speech, you’re never gonna find it.”

The First Amendment protects the right of free speech for any person within the U.S. Yet there is no clear definition of where this protection ends.

Our modern definitions of protected speech have been shaped by multiple court rulings, such as Jacobellis v. Ohio and National Socialist Party of America v. Village of Skokie. Broadly speaking, speech is considered protected unless it falls into one of the following five categories: obscene speech, subversive speech, fighting words, defamation and commercial speech.

Speaking Hate

While it is almost universally accepted that hate speech should not be condoned, what exactly qualifies as hate speech is very much up for interpretation.

The United Nations states that hate speech is “any kind of communication in speech, writing or behaviour, that attacks or uses pejorative or discriminatory language with reference to a person or a group on the basis of who they are, in other words, based on their religion, ethnicity, nationality, race, colour, descent, gender or other identity factor.”

However, even this definition leaves much in a gray area. What one person interprets as hate speech could, to another, be merely improper or crude.

This makes monitoring hate speech difficult. And when gray areas exist, legally speaking, it’s best to err on the side of protecting that speech.

If someone wants to change this, Colon said, “We’re going to need a definition of what hate speech is, and it has to be a definition that’s uniformly accepted.”

It should also be noted that the moment hateful speech falls into one of the aforementioned categories, it is no longer protected. But until that hate speech includes fighting words or subversive speech, for example, it is still protected.

What About Social Media?

Interestingly, these protections only extend to people in public places. According to Colon, however, within private realms, whether a private residence or a business, it is up to the owner to decide what types of speech are allowed.

In social media, this can be seen through the terms and conditions of a platform. These terms can — and often do — regulate types of speech, including hate speech, that appear on the platform.

So, as Colon pointed out, when the argument is made that social media platforms need to be doing more to regulate hate speech and remove it from their platforms, what is actually being said is that these companies aren’t abiding by their own terms and conditions that already ban such speech.

But the complexity of hate speech is largely the reason so many posts fly under the radar. Moderating this type of content accurately takes highly sophisticated algorithms.

Because of this, many social media companies rely on manual moderation, either on its own or to supplement algorithmic moderation. This work can be emotionally taxing, as shown in The Verge’s Feb. 2019 article exploring the trauma that many Facebook moderators experience.

An Algorithmic Future

As with nearly everything, COVID-19 has significantly changed the status quo. With a large amount of misinformation about the virus emerging in the past year, manual moderation has become largely inefficient.

Facebook CEO Mark Zuckerberg insists that the future will lie in the hands of artificial intelligence. According to his April 2018 Congressional testimony, he believes that artificial intelligence will one day be able to combat fake news, hate speech, discrimination and propaganda. However, he failed to specify any sort of expected timeline or framework.

If hate speech is ever to officially and legally enter the realm of unprotected speech, we'll need a combination of a few things. First, we'll need a clearer, more universal definition of hate speech. Second, technology will need to better understand and adapt to the nuances of human speech patterns. Finally, the nation will need to agree that hate speech, no matter its intent, is harmful to public discourse. Only then will social media be able to combat it more effectively.

“Words have impact,” Colon said. “There’s an effect to everything that people say. Some of that impact may be minimal, but some of that impact may be severe.”

The regulation of speech is a complex, often divisive prospect. Only through careful consideration can it be done effectively — even online.