Last fall, Student Government (SG) and RIT launched a course evaluation system integrated with the SG-developed OpenEvals, giving students a platform to review professors and courses and compare them with similar ones. The system has been moderately successful; however, getting students to fill out the evaluations has been the biggest challenge. Only 62 percent of students completed them last fall semester, down from 65 percent the semester before.

Completion rates vary by college. Last fall, NTID had the highest rate at almost 70 percent, while the College of Health Sciences and Technology (CHST) had the lowest at 46 percent. Because at least 65 percent of a class must complete the evaluation before students can see the results for that class (a lower rate is treated as an insufficient sample size), not enough students have been filling them out.

“Students should want to provide feedback, but if it is an average professor that you don’t have anything super good or really bad to say about, you’re less motivated to fill out an evaluation,” says Andrea Shaver, SG president. “The idea is to try and get students in that middle ground to fill out their evaluations more; hopefully, it would make the results a little less biased.”

Students give many reasons for not filling out their evaluations, from being too busy during the last few weeks of the semester to not having anything particularly positive or negative to say about the professor. Many students also seem unaware that they can see the results if enough people in a class complete the evaluation, a capability that went into effect in fall 2015.

Despite numerous efforts, including a campaign promising piñatas around campus if enough students filled out their evaluations and various emails urging students to do so, the completion rate still hovers below the threshold. “I think where we really failed was getting students behind this idea of seeing their evaluations,” Shaver explained.

OpenEvals is also not completely intuitive: searching requires the exact course number, and students cannot search by professor, for example. The site also does not explain why some professor information is missing, which is often the result of safeguards for new professors or of small classes that do not provide a large enough sample size.

Looking ahead, SG plans to integrate the teacher evaluation information into Tiger Center so that it is available to students when they choose courses, which would ideally prompt more students to fill out their evaluations after seeing and using the results themselves. SG has gained approval from the provost for the project, and the Student IT Office is working on integrating the information with Tiger Center; however, the completion date is unknown.

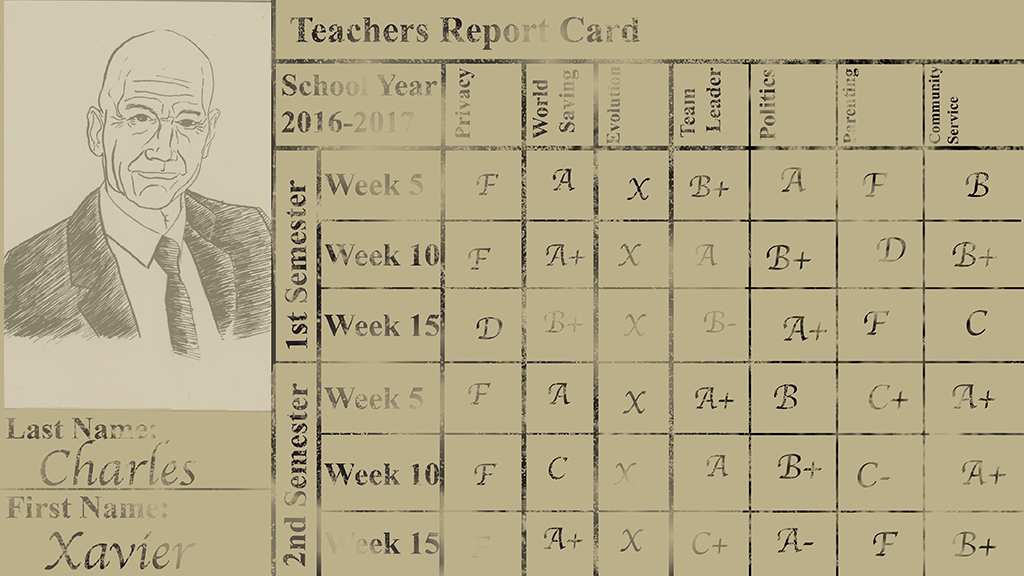

Teaching evaluations have been a polarizing and much-debated topic. Some professors disagree that student evaluations should be used to grade them, some do not want students to see what they consider personal data, and others question how effective evaluations are and what biases may be present in them. Debates and revisions continue in an effort to find a middle ground between professor and student concerns.

Regardless, most professors and departments use evaluations to continually critique and improve not only their courses but also the professors’ teaching methods. “I think feedback from students is what you should use to change your course, because if it benefits students then it’s worth making a change,” says Carol Oehlbeck, a math professor and course coordinator for discrete math.

Oehlbeck has made numerous improvements to her classes over the years, including changing the pace of her lectures and overhauling the way she organizes content on MyCourses. Slowing the pace of her course was one of the most important changes, especially given the influx of deaf students, who need more time to process information they receive through interpreters. She had not considered this as much before receiving feedback from deaf students.

Student feedback has also created change at a higher level. Many years ago, the College of Science (COS) was receiving very negative feedback for most of its calculus courses, which eventually led to the creation of “Math Crash,” a day-long review session for students in math classes. Calculus finals were also scheduled for the day immediately after Math Crash.

After students submit their evaluations, each professor must complete a self-evaluation that summarizes the student feedback, identifies what went well and what they will keep doing, notes what they need to change, and lays out a plan for making those changes. Department heads then read the professor’s self-evaluation along with all of the student evaluations.

From there, professors are rated against professors teaching other sections, other courses, and in other departments and colleges. A department head’s evaluation of a professor draws directly, at least in part (depending on the department), on student evaluations. Department heads may also be able to stop a professor from teaching a class again if student feedback is negative enough, or take other actions, such as advising the professor to change certain aspects of their teaching in response to students. The process is extensive, and student feedback is taken into account at every level, even if some professors do not pay much attention to it.

“Don’t just walk away from that class with those comments; put it in the evaluation. Somebody needs to know about it, so put it in the evaluation with the knowledge that someone is going to see that and perhaps make a change, whether it is the professor or someone higher than the professor. That’s the only way things are going to change,” Oehlbeck concluded.